Parallel Processing with Java Virtual Threads

In 2023, virtual threads were officially added to Java with the release of JDK 21. They are described as “lightweight threads that reduce the effort of writing, maintaining, and debugging high-throughput concurrent applications.” In this article, I’ll give an overview of how virtual threads work, then discuss the pros and cons of different virtual threading implementations.

Virtual Threads Overview

Virtual threads differ from traditional platform threads in how they are scheduled to run on their corresponding OS threads. When a platform thread is started, a system call is made to allocate resources for an OS thread and register it with the scheduler. The platform thread then runs on this OS thread for the entirety of its lifespan. When the platform thread finishes running, the resources are returned to the OS.

By contrast, the JVM runs virtual threads on a shared pool of OS threads, known as carrier threads. When a virtual thread starts, it’s scheduled to run on one of the carrier threads, so there’s no need to make an expensive system call. This is similar to how a platform thread pool works, in that a group of OS threads is reused to execute a set of tasks.
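
To make the distinction concrete, here is a minimal sketch, assuming JDK 21 or later. It starts one thread of each kind with the Thread.ofPlatform() and Thread.ofVirtual() builders and asks each thread whether it is virtual. The ThreadKinds class and runsVirtual method are illustrative names only.

```java
import java.util.concurrent.atomic.AtomicBoolean;

class ThreadKinds {
    // Starts a thread from the given builder and reports whether it ran as a virtual thread
    static boolean runsVirtual(Thread.Builder builder) throws InterruptedException {
        AtomicBoolean virtual = new AtomicBoolean();
        Thread thread = builder.start(() -> virtual.set(Thread.currentThread().isVirtual()));
        thread.join(); // join() guarantees the write inside the thread is visible here
        return virtual.get();
    }

    public static void main(String[] args) throws InterruptedException {
        // Platform thread: backed by its own OS thread for its whole lifetime
        System.out.println("platform thread is virtual: " + runsVirtual(Thread.ofPlatform()));
        // Virtual thread: mounted on one of the JVM's carrier threads while it runs
        System.out.println("virtual thread is virtual: " + runsVirtual(Thread.ofVirtual()));
    }
}
```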

Virtual threads run on their carrier threads until they block, for example on an I/O call. At that point the JVM may unmount the virtual thread from its carrier so the carrier can run another virtual thread. When the I/O call completes, the virtual thread is scheduled to resume on one of the carrier threads, not necessarily the one it started on. This makes efficient use of carrier threads when the tasks are mostly I/O bound. It also means that, unlike with platform thread pools, the number of concurrent tasks can exceed the number of allocated OS threads.
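
The sketch below illustrates the effect, assuming JDK 21 or later. It uses Thread.sleep as a stand-in for a blocking I/O call, since sleeping also unmounts a virtual thread from its carrier. BlockingDemo and runBlockingTasks are hypothetical names. Ten thousand tasks that each block for one second should typically finish in a little over one second, even though the default number of carrier threads matches the number of CPU cores. A fixed pool with one platform thread per core would need roughly 10,000 / cores seconds for the same work.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

class BlockingDemo {
    // Runs `count` tasks, one virtual thread each, where every task blocks for `sleep`.
    // Returns the number of tasks that completed.
    static int runBlockingTasks(int count, Duration sleep) {
        AtomicInteger completed = new AtomicInteger();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < count; i++) {
                executor.execute(() -> {
                    try {
                        Thread.sleep(sleep); // stand-in for a blocking I/O call
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                        return;
                    }
                    completed.incrementAndGet();
                });
            }
        } // close() waits for every submitted task to finish
        return completed.get();
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        int done = runBlockingTasks(10_000, Duration.ofSeconds(1));
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        System.out.println(done + " tasks finished in " + elapsedMs + " ms");
    }
}
```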

Virtual Threading Implementations

Both platform threads and virtual threads run on OS threads, so code that is actively executing runs at the same speed on either. However, virtual threads are much cheaper to create, and their scheduling lets a blocked task give up its OS thread. As a result, virtual threading implementations can outperform platform threading implementations, especially for I/O-bound work.

The remainder of this article will discuss the pros and cons of different virtual threading implementations for a common scenario: a large list of tasks that should be processed in parallel. For the sake of simplicity, the tasks in these examples are of type Runnable and will not throw an exception.

Thread-Per-Task

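Starting one platform thread per task can exhaust OS resources once the task count reaches the thousands or tens of thousands, typically surfacing as an OutOfMemoryError when a new thread can't be created. The same approach can be viable with virtual threads, though. This implementation, called thread-per-task, uses a virtual thread executor that starts a new virtual thread for each task.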

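```java
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public void parallelProcessThreadPerTask(List<Runnable> tasks) {
    // Each submitted task gets its own new virtual thread (JDK 21+)
    try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
        for (Runnable task : tasks) {
            executor.execute(task);
        }
    } // close() waits for all submitted tasks to complete
}
```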

Thread-per-task works best when the tasks are lightweight, relatively small in number, and contain blocking I/O calls. The virtual threads can be taken off the carrier threads when they are waiting for an I/O call to return, freeing up the carrier threads to execute other tasks.

The main downside to thread-per-task is that there’s no well-defined limit to the number of concurrent tasks. This can lead to out-of-memory errors if the tasks use a lot of memory, or to rate-limit errors if the tasks all call the same API. Similarly, if every task makes a database call, relying on the database connection pool to throttle them is usually not a best practice. Throttling is better done upstream, before thousands of threads end up waiting for connections. In these cases, it’s better to specify a maximum number of concurrent tasks.
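
One way to see that thread-per-task imposes no cap is a sketch in which every task waits until all tasks have started. This uses a hypothetical CountDownLatch setup that is not part of the original code. It can only succeed if every task is in flight at the same time. If concurrency were capped below the task count, the early tasks would time out instead. Unbounded concurrency is exactly what causes problems when each task holds a lot of memory or calls a rate-limited API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class UnboundedDemo {
    static void parallelProcessThreadPerTask(List<Runnable> tasks) {
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (Runnable task : tasks) {
                executor.execute(task);
            }
        }
    }

    // Returns how many tasks observed all `count` tasks running at the same time
    static int tasksRunningTogether(int count) {
        CountDownLatch allStarted = new CountDownLatch(count);
        AtomicInteger sawEveryone = new AtomicInteger();
        List<Runnable> tasks = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            tasks.add(() -> {
                allStarted.countDown();
                try {
                    // The timeout keeps the demo from hanging if concurrency were capped
                    if (allStarted.await(10, TimeUnit.SECONDS)) {
                        sawEveryone.incrementAndGet();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        parallelProcessThreadPerTask(tasks);
        return sawEveryone.get();
    }

    public static void main(String[] args) {
        System.out.println(tasksRunningTogether(10_000) + " tasks were in flight at the same time");
    }
}
```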

Specified Number of Concurrent Tasks

To define a maximum number of concurrent tasks with virtual threads, limit the number of virtual threads that can exist at a given time. One of the easiest ways to accomplish this is to split the list of tasks evenly into groups, then assign each thread a group of tasks to run in sequence. This works well when all tasks take the same amount of time to execute. However, it’s less efficient when execution time varies from task to task.
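
Here is a minimal sketch of this grouping approach, assuming JDK 21 or later. The name parallelProcessGroups is hypothetical. The method uses ceiling division to split the list into at most maxConcurrentTasks consecutive groups. Each group then runs sequentially on its own virtual thread.

```java
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class GroupedProcessing {
    // Splits `tasks` into at most `maxConcurrentTasks` groups of (nearly) equal size
    // and runs each group sequentially on its own virtual thread
    static void parallelProcessGroups(List<Runnable> tasks, int maxConcurrentTasks) {
        int groupSize = (tasks.size() + maxConcurrentTasks - 1) / maxConcurrentTasks; // ceiling division
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int start = 0; start < tasks.size(); start += groupSize) {
                List<Runnable> group = tasks.subList(start, Math.min(start + groupSize, tasks.size()));
                // Tasks within a group run one after another on the same virtual thread
                executor.execute(() -> group.forEach(Runnable::run));
            }
        } // close() waits for every group to finish
    }
}
```

Because the groups are fixed up front, a group that happens to hold several slow tasks keeps its thread busy while other threads sit idle.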

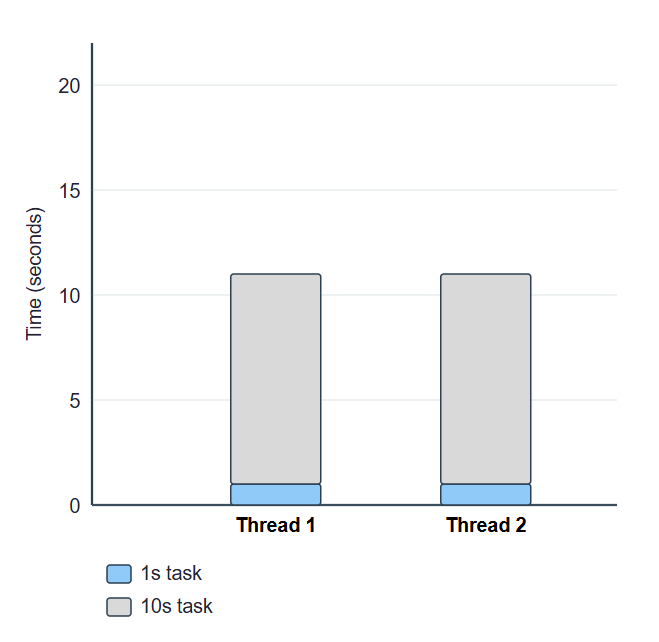

To illustrate this, I’ll use a simple example with 2 threads and 4 tasks. Two of the tasks take 1 second each to run, while the other two take 10 seconds each. If the tasks are split into groups, the best-case scenario is that each group contains one 1 second task and one 10 second task. Total execution time in this scenario is 11 seconds.

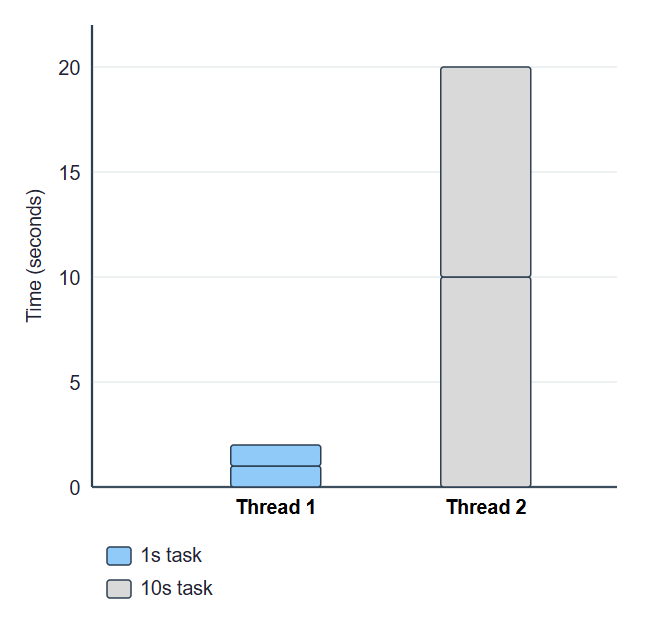

In the worst-case scenario, one group contains both 1 second tasks, while the other group contains both 10 second tasks. Total execution time in this scenario is 20 seconds.

The same problem applies to running tasks in batches. Here, each batch starts one thread per task and waits for the whole batch to complete before starting the next one. In the worst-case scenario, each batch contains a 10 second task, so the total time is again 20 seconds.
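
The batch variant can be sketched the same way. This is again a hypothetical parallelProcessBatches, assuming JDK 21 or later. Closing the executor at the end of each batch blocks until that batch's slowest task finishes, which is where the wasted time comes from.

```java
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class BatchedProcessing {
    // Runs tasks in batches of `maxConcurrentTasks`, one virtual thread per task,
    // and waits for each batch to finish before starting the next one
    static void parallelProcessBatches(List<Runnable> tasks, int maxConcurrentTasks) {
        for (int start = 0; start < tasks.size(); start += maxConcurrentTasks) {
            List<Runnable> batch = tasks.subList(start, Math.min(start + maxConcurrentTasks, tasks.size()));
            try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
                batch.forEach(executor::execute);
            } // close() blocks until the slowest task in this batch is done
        }
    }
}
```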

Processing Tasks from Queue

When processing time varies from task to task, a more efficient implementation is to poll tasks from a queue. Here, a task is polled from the queue and executed whenever a thread is available. This process repeats itself until the queue is empty.

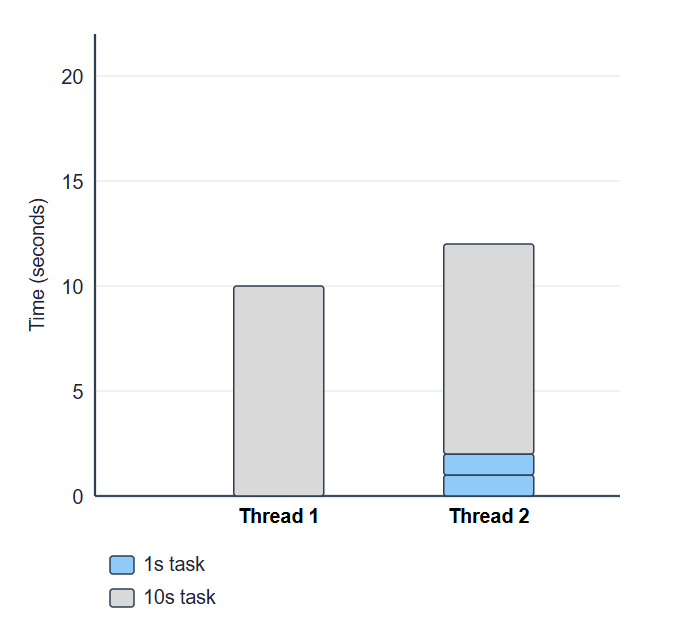

Using the same example as above with 2 threads and 4 tasks, the best-case scenario here is the same with a total execution time of 11 seconds. However, the worst-case scenario only takes 12 seconds to execute.
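
The arithmetic in this example can be checked with a small simulation. The sketch below is an illustration, not one of the implementations discussed here. It computes the finish time of the grouping strategy and the queue strategy for every ordering of the four tasks, then reports the best and worst cases. For the queue strategy, it assumes each task goes to whichever thread becomes free first, tracked with a PriorityQueue of the times at which each thread is next free.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;
import java.util.function.BiFunction;
import java.util.function.IntBinaryOperator;

class Makespan {
    // Tasks are pre-split into consecutive groups, one per thread; the slowest group finishes last
    static int grouped(List<Integer> durations, int threads) {
        int groupSize = (durations.size() + threads - 1) / threads;
        int finish = 0;
        for (int start = 0; start < durations.size(); start += groupSize) {
            int groupTime = 0;
            for (int d : durations.subList(start, Math.min(start + groupSize, durations.size()))) {
                groupTime += d;
            }
            finish = Math.max(finish, groupTime);
        }
        return finish;
    }

    // Each task is taken from a shared queue by whichever thread becomes free first
    static int queued(List<Integer> durations, int threads) {
        PriorityQueue<Integer> freeAt = new PriorityQueue<>(); // time each thread is next idle
        for (int i = 0; i < threads; i++) {
            freeAt.add(0);
        }
        int finish = 0;
        for (int d : durations) {
            int end = freeAt.poll() + d;
            freeAt.add(end);
            finish = Math.max(finish, end);
        }
        return finish;
    }

    // Applies `strategy` to every ordering of the tasks and combines the results with `pick`
    // (Math::max for the worst case, Math::min for the best case). Brute force: tiny inputs only.
    static int overAllOrderings(List<Integer> remaining, List<Integer> prefix, int threads,
                                BiFunction<List<Integer>, Integer, Integer> strategy,
                                IntBinaryOperator pick) {
        if (remaining.isEmpty()) {
            return strategy.apply(prefix, threads);
        }
        Integer result = null;
        for (int i = 0; i < remaining.size(); i++) {
            List<Integer> rest = new ArrayList<>(remaining);
            List<Integer> next = new ArrayList<>(prefix);
            next.add(rest.remove(i));
            int candidate = overAllOrderings(rest, next, threads, strategy, pick);
            result = (result == null) ? candidate : pick.applyAsInt(result, candidate);
        }
        return result;
    }

    public static void main(String[] args) {
        List<Integer> durations = List.of(1, 1, 10, 10); // seconds
        List<Integer> none = List.of();
        // prints "grouped: best 11 s, worst 20 s"
        System.out.println("grouped: best " + overAllOrderings(durations, none, 2, Makespan::grouped, Math::min)
                + " s, worst " + overAllOrderings(durations, none, 2, Makespan::grouped, Math::max) + " s");
        // prints "queued:  best 11 s, worst 12 s"
        System.out.println("queued:  best " + overAllOrderings(durations, none, 2, Makespan::queued, Math::min)
                + " s, worst " + overAllOrderings(durations, none, 2, Makespan::queued, Math::max) + " s");
    }
}
```

For the queue strategy, the 12 second worst case comes from orderings such as 1, 10, 1, 10. One thread is tied up by the first 10 second task, while the other runs both 1 second tasks and then the second 10 second task.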

Queue Processing Implementations

For platform threads, one of the simplest queue processing implementations is to use a thread pool.

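```java
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public void parallelProcessThreadPool(List<Runnable> tasks, int maxConcurrentTasks) {
    // The pool size caps how many tasks run at once; extra tasks wait in the pool's queue
    try (ExecutorService executor = Executors.newFixedThreadPool(maxConcurrentTasks)) {
        for (Runnable task : tasks) {
            executor.execute(task);
        }
    } // close() calls shutdown() and waits for queued and running tasks to finish
}
```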

In this implementation, calling executor.execute() for each task adds the tasks to the thread pool’s built-in queue. The number of threads in the pool defines the maximum number of concurrent tasks.

Platform thread pools offer the same performance boost from reusing OS threads that virtual threads do. However, the number of concurrent tasks is limited by the pool size. For I/O bound tasks, it can be beneficial to allow the number of concurrent tasks to exceed the number of allocated OS threads, while still placing a limit on the number of concurrent tasks. This can be accomplished with virtual threads.

Queue Processing Implementation - Semaphore

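Virtual threads have JVM settings that control the size of the carrier thread pool, such as the jdk.virtualThreadScheduler.parallelism and jdk.virtualThreadScheduler.maxPoolSize system properties. However, there’s no setting to define the maximum number of concurrent tasks, so that limit has to be implemented in code. One common implementation is to use a semaphore.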

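```java
import java.util.List;
import java.util.concurrent.Semaphore;

public void parallelProcessSemaphore(List<Runnable> tasks, int maxConcurrentTasks) {
    Semaphore permits = new Semaphore(maxConcurrentTasks, true);
    for (Runnable task : tasks) {
        // Block the submitting loop until a running task frees a permit
        permits.acquireUninterruptibly();
        Thread.startVirtualThread(() -> {
            try {
                task.run();
            } finally {
                permits.release(); // release even if the task throws
            }
        });
    }
    // Reacquiring every permit means every task has finished
    permits.acquireUninterruptibly(maxConcurrentTasks);
}
```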

Here, the semaphore has a set of permits, and each thread must wait for a permit before starting. When the task is complete, the permit is released for another thread to acquire. This ensures the number of concurrent tasks doesn't exceed the specified limit. The “queue” in this implementation is the list of tasks in the for-loop that are waiting for permits.acquireUninterruptibly() to return before running.
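
To check that the limit holds, the sketch below instruments each task with a counter of currently running tasks and records the peak. It contains a copy of the semaphore method that reacquires all permits at the end, so it returns only after every task has finished. That final reacquire is an assumption made for this demo. Thread.sleep stands in for blocking I/O, and the class and helper names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

class SemaphoreDemo {
    static void parallelProcessSemaphore(List<Runnable> tasks, int maxConcurrentTasks) {
        Semaphore permits = new Semaphore(maxConcurrentTasks, true);
        for (Runnable task : tasks) {
            permits.acquireUninterruptibly();
            Thread.startVirtualThread(() -> {
                try {
                    task.run();
                } finally {
                    permits.release();
                }
            });
        }
        permits.acquireUninterruptibly(maxConcurrentTasks); // wait for all tasks to finish
    }

    // Runs `taskCount` simulated blocking tasks and returns the most that ran at once
    static int peakConcurrency(int taskCount, int maxConcurrentTasks) {
        AtomicInteger running = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        List<Runnable> tasks = new ArrayList<>();
        for (int i = 0; i < taskCount; i++) {
            tasks.add(() -> {
                peak.accumulateAndGet(running.incrementAndGet(), Math::max);
                try {
                    Thread.sleep(20); // stand-in for a blocking I/O call
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    running.decrementAndGet();
                }
            });
        }
        parallelProcessSemaphore(tasks, maxConcurrentTasks);
        return peak.get();
    }

    public static void main(String[] args) {
        System.out.println("peak concurrency: " + peakConcurrency(500, 25)); // never above 25
    }
}
```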

Queue Processing Implementation - Concurrent Queue

Another implementation is to create a specified number of threads, then have each thread poll tasks from a concurrent queue.

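```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

public void parallelProcessQueue(List<Runnable> tasks, int maxConcurrentTasks) throws InterruptedException {
    // Thread-safe queue shared by all worker threads
    Queue<Runnable> taskQueue = new ConcurrentLinkedQueue<>(tasks);
    List<Thread> workers = new ArrayList<>();
    for (int i = 0; i < maxConcurrentTasks; ++i) {
        // Each worker keeps polling until the queue is empty, then exits
        workers.add(Thread.startVirtualThread(() -> {
            Runnable task;
            while ((task = taskQueue.poll()) != null) {
                task.run();
            }
        }));
    }
    // Wait for all workers to drain the queue
    for (Thread worker : workers) {
        worker.join();
    }
}
```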

Here, each thread has a loop that polls and executes tasks from the queue. Once the queue is empty, the thread exits. This implementation is similar to the semaphore implementation, with the queue being the ConcurrentLinkedQueue and the configured number of threads defining the maximum number of concurrent tasks.
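
There is one practical difference from the semaphore version. Here, a small, fixed set of virtual threads runs all of the tasks, whereas the semaphore version starts a new virtual thread for every task. The sketch below uses hypothetical names and assumes JDK 21 or later. It records which thread ran each task, and the number of distinct threads never exceeds maxConcurrentTasks. It includes a copy of the queue method that joins its worker threads before returning.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentLinkedQueue;

class QueueDemo {
    static void parallelProcessQueue(List<Runnable> tasks, int maxConcurrentTasks) throws InterruptedException {
        Queue<Runnable> taskQueue = new ConcurrentLinkedQueue<>(tasks);
        List<Thread> workers = new ArrayList<>();
        for (int i = 0; i < maxConcurrentTasks; ++i) {
            workers.add(Thread.startVirtualThread(() -> {
                Runnable task;
                while ((task = taskQueue.poll()) != null) {
                    task.run();
                }
            }));
        }
        for (Thread worker : workers) {
            worker.join();
        }
    }

    // Returns how many distinct threads ended up executing the tasks
    static int distinctThreads(int taskCount, int maxConcurrentTasks) throws InterruptedException {
        Set<Thread> seen = ConcurrentHashMap.newKeySet(); // Thread uses identity equality
        List<Runnable> tasks = new ArrayList<>();
        for (int i = 0; i < taskCount; i++) {
            tasks.add(() -> seen.add(Thread.currentThread()));
        }
        parallelProcessQueue(tasks, maxConcurrentTasks);
        return seen.size();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(distinctThreads(1_000, 4) + " distinct threads ran 1000 tasks");
    }
}
```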

Conclusion

Virtual threads add another tool to the Java concurrency toolbox. While platform threads offer comparable performance and simplicity in many scenarios, virtual threading implementations can be faster and more efficient for I/O bound tasks. I've seen them work as advertised in production, so no complaints from me.